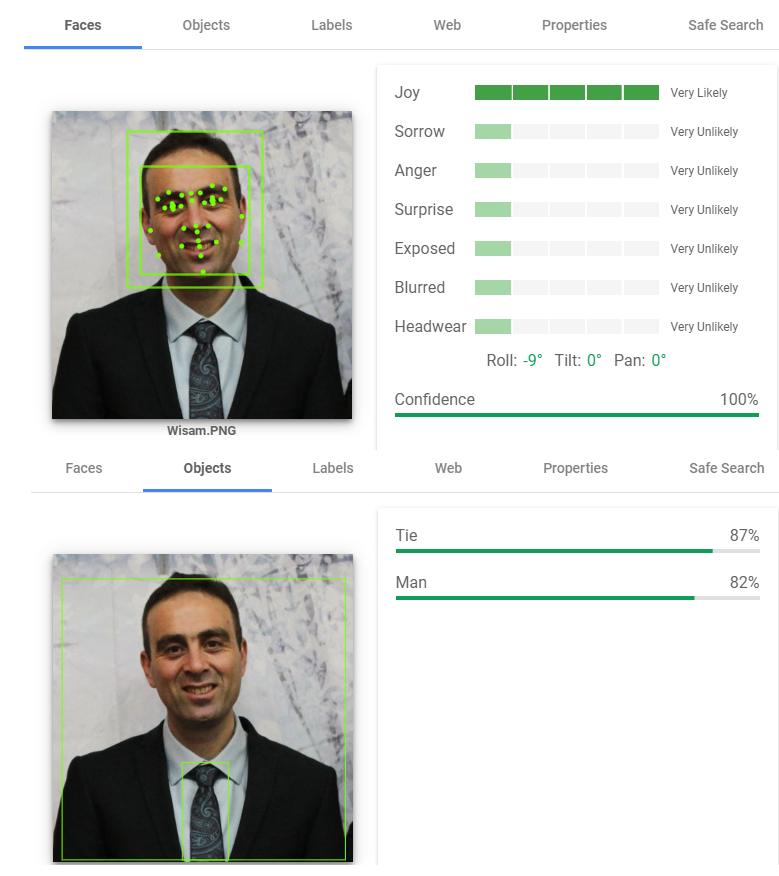

Google launched a very interesting machine learning technology this week, Vision AI (click on the demo link, then drag and drop your photo for analysis). An API is also available for this tool.

Wisam Abdulaziz

Keyword Research and Content Mapping

Posted on April 9, 2019

It is not difficult to explain what a keyword is: most of us use Google every day, typing words and questions into the search box to find information, answers, services and products. When we market a service or a product in search engines, it is very important to find keywords that can make a difference to the bottom line (leads and sales). Finding relevant keywords for a website is not a difficult task, but understanding the intent behind those keywords and mapping that intent to the marketing funnel takes some extra effort.

Looking at the funnel above can raise a simple question: why do we need to target keywords in the awareness and interest stages? Shouldn't we target bottom of the funnel keywords only? There are a few reasons for that:

- There is a limited inventory for bottom of the funnel keywords for every business

- Bottom of the funnel keywords are normally very competitive and difficult to rank for, especially for a new website

- Bottom of the funnel keywords do not fit informational content like blog posts and articles, which are a very important component of SEO

Before going through examples for each stage, there are four attributes of a keyword to keep in mind that will help you do the right mapping:

- Intent, use your judgment to decide the location of a keyword in the marketing funnel, words like buy, hire, services are buying signals

- Search volume (the number of people that search for a keyword on a monthly basis)

- Keyword difficulty, an estimate provided by third party tools like SEMrush that predicts how difficult it is to rank for a specific keyword

- Number of words in a keyword, normally more words means less competition (long tail keywords)
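The attributes above can be turned into a rough first-pass filter. The sketch below is only an illustration: the signal words come from the examples in this post, while the function names and the four-word long tail threshold are assumptions of mine. Intent still needs human judgment, so treat the output as a starting point.

```python
# Signal words taken from the examples in this post; extend them per business.
BUYING_SIGNALS = {"buy", "hire", "services"}
QUESTION_WORDS = {"what", "how", "why", "when", "where"}


def guess_funnel_stage(keyword: str) -> str:
    """Return a rough funnel stage for a keyword based on its words alone."""
    words = keyword.lower().split()
    if any(word in BUYING_SIGNALS for word in words):
        return "bottom"
    if words and words[0] in QUESTION_WORDS:
        return "top"
    return "middle"


def is_long_tail(keyword: str, min_words: int = 4) -> bool:
    """Treat keywords with many words as long tail (the threshold is an assumption)."""
    return len(keyword.split()) >= min_words


for phrase in ["SEO services", "What is SEO", "Digital marketing agencies"]:
    print(phrase, "->", guess_funnel_stage(phrase))
```

A keyword like "SEO company Toronto" would land in the middle bucket here, which shows why the final mapping should be reviewed by hand.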

Awareness keywords (top of the funnel):

Let us assume you offer SEO services in Toronto and see what keywords we can use for every stage of the funnel. Thankfully it is 2019 and awareness of SEO is really high, but there are still many businesses that do not know about it or know only a little about it.

We can target people who have never heard of SEO using digital marketing content that speaks to SEO, examples:

- How to rank higher on google

- What is Digital marketing

For people who have heard about SEO but are seeking more information:

- What is SEO

- How to do SEO

Articles and blogs are the best form of content for targeting awareness keywords. Creating awareness for an SEO client that doesn't have a blog or an article section is almost impossible.

For clients that sell popular products and services (dentists, lawyers etc.) awareness might not be required, but blogging on a regular basis is still recommended to target top of the funnel keywords (writing interesting educational topics for their users).

Expect a very low conversion rate for top of the funnel keywords. You need to target highly searched keywords or a large number of keywords at this stage to make up for the low conversion rate. Targeting top of the funnel keywords has many benefits that go beyond the short term conversion rate:

- Establishing authority in the space

- It is easier to find low competition keywords that can fit the awareness stage, there is almost unlimited inventory of them

- Blogging on a regular basis will send freshness signals to search engines

- On average, it takes approximately five to seven touchpoints to close a sale and a visit from those keywords will count as one of them

It is strongly recommended to use marketing automation to nurture top of the funnel traffic and move it down in the marketing funnel.

Interest keywords (middle of the funnel):

Some people also call them consideration keywords. They are used to target users who have become fully aware of what they need to solve their pain point. In our example, the client is now aware that they need digital marketing to keep growing their business, and that they need to hire someone to do it for them as they do not have the expertise themselves or in-house. Examples of keywords that could be used for this stage:

- Digital marketing agencies

- Online advertising services

In general, marketing or product pages will be used to target those keywords; in some cases blogs or articles could also be used, depending on the keyword. A better conversion rate is expected from those keywords compared to the awareness keywords, but they will be more competitive with a limited inventory.

Decision keywords (bottom of the funnel):

At this stage the user (a potential client in our SEO example) has decided, possibly after a lot of research, that they need search engine optimization >> to improve their ranking >> which can lead to relevant, high-converting traffic >> which will lead to business growth (in other words, they will make more money). The reason I map the thinking process this way (and I recommend sharing it with the client) is to make sure the client has the right expectations about the service. Every client's ultimate goal is making more money, but our accountability as SEOs at the beginning of the project is to rank and drive relevant traffic. Being accountable only for revenue is not fair, as conversion rate depends on too many variables beyond our control (UX, prices, reputation etc.). Examples of keywords to use in this stage:

- SEO company Toronto (location is a very strong buying signal)

- SEO services

- SEO company

The home page, marketing or product pages will be used to target those keywords; blogs and articles do not fit well here. A better conversion rate is expected from those keywords compared to awareness keywords, but they will be more competitive with a limited inventory.

If the conversion rate is still low, causing a very high CPA (cost per acquisition), here are a few things you can suggest to the client:

- If you see any glaring UX issues (e.g. a missing call to action above the fold), suggest fixing them; otherwise propose a UX audit

- If call tracking with call recording is implemented, listen to a few phone calls and make sure they are answered professionally

- Implement user engagement solutions like an exit pop-up or chat

- Run a competitive analysis to find out if the client's pricing is competitive and if they have a unique selling proposition

The keyword research process:

Regardless of the available budget, full keyword research is recommended at the beginning of any SEO project. Here are a few recommendations that can help with keyword research:

- Hold a meeting with the client to understand their business and find out if they are interested in ranking for any specific keywords. It is also important to understand their areas of operation (which countries or cities they can service)

- Request access to any paid search campaign, which helps a lot in finding the right target keywords quickly (keywords with a high number of clicks and a high conversion rate)

- Ask the client to provide a few competitors to be used later in a ranking competition analysis

After getting the information above we should be able to come up with a few seed keywords. The seed keywords for an SEO company will be something like:

- SEO

- Internet marketing

- SEO services

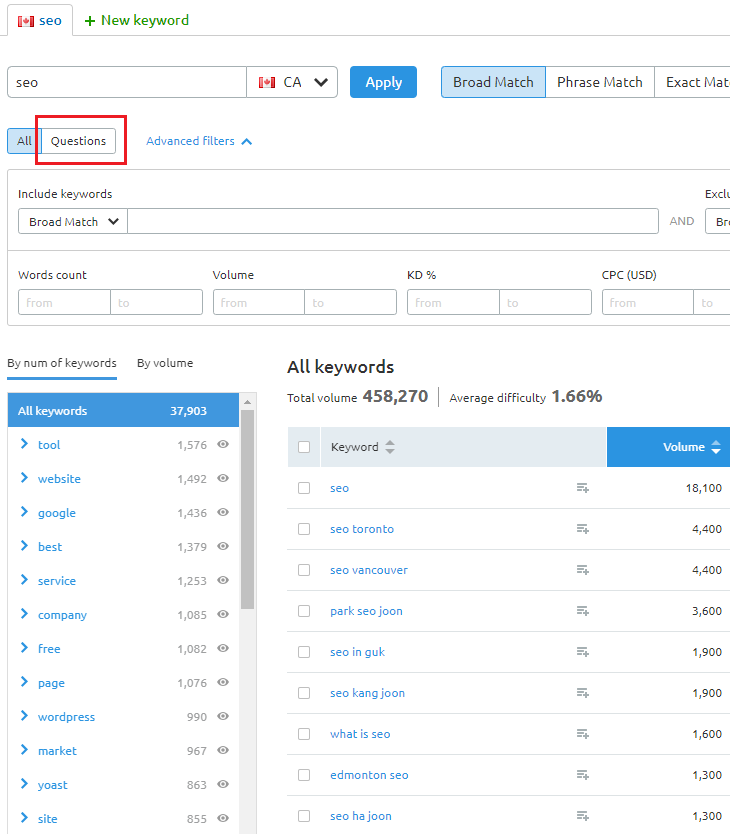

The seed keywords will be used in your favorite keyword research tool (e.g. SEMrush, Ahrefs or Google Keyword Planner). I will be using SEMrush for this example.

With some work on the filters available in this tool like:

- Number of words

- Questions

- Search volume

- Country (in my case I have chosen Canada)

You should be able to generate a list of keywords that can fit all marketing stages (top, middle and bottom of the funnel).
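If you export the keyword list, the same filters can be applied in a few lines of code. This is a minimal sketch and not the SEMrush API: the `Keyword` fields, the sample rows and their search volumes are made up for illustration.

```python
from dataclasses import dataclass

QUESTION_WORDS = ("what", "how", "why", "when", "where", "who")


@dataclass
class Keyword:
    phrase: str
    monthly_searches: int
    country: str


def filter_keywords(keywords, country, min_volume=0, min_words=1, questions_only=False):
    """Keep keywords that pass the country, volume, word count and question filters."""
    selected = []
    for kw in keywords:
        words = kw.phrase.lower().split()
        if kw.country != country:
            continue
        if kw.monthly_searches < min_volume:
            continue
        if len(words) < min_words:
            continue
        if questions_only and words[0] not in QUESTION_WORDS:
            continue
        selected.append(kw)
    return selected


# Made-up sample rows standing in for an exported keyword list.
sample = [
    Keyword("seo services", 1900, "CA"),
    Keyword("what is seo", 2400, "CA"),
    Keyword("how to do seo", 590, "CA"),
    Keyword("seo", 9900, "US"),
]

questions = filter_keywords(sample, country="CA", min_volume=500, questions_only=True)
print([kw.phrase for kw in questions])
```

Question-style keywords selected this way are natural candidates for the top of the funnel list.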

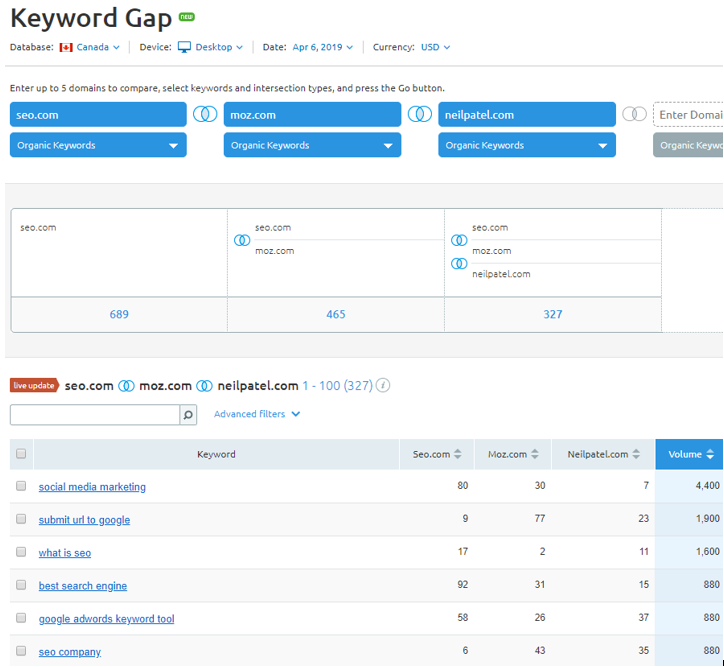

Keyword Gap is another tool available in SEMrush that can help a lot with keyword research using ranking competition analysis.

Please note that some vetting is required to refine the keyword lists that SEMrush produces. SEMrush provides Excel exports for all those lists, which makes them easier to work with. After vetting is completed you should have a list of keywords in three groups:

- Bottom of the funnel keywords, the most important keywords, which the home page and other key pages will be used to target

- Middle of the funnel keywords

- Top of the funnel keywords that will be used in the blog calendar if the client decides to blog

Two factors will decide which type of keywords you can target first when you start a project:

- The level of authority the website has compared to the difficulty of the keyword (SEMrush provides a keyword difficulty score)

- The budget available for the project

Here are a few scenarios you can expect:

- Low budget project and low authority domain with difficult keywords: Start the project with easy keywords in the bottom or middle of the funnel and long tail keywords (low search volume relevant keywords with low difficulty and multiple words).

- Healthy budget project and low domain authority with difficult keywords: Choose easy keywords in the bottom or the middle of the funnel and provide a content plan to target top of the funnel keywords

- Healthy budget project and high domain authority with difficult keywords: Choose a mix of difficult and easy keywords in the bottom and the middle of the funnel and provide a content plan to target top of the funnel keywords

At this stage we should have a list of target keywords and we can move to the content mapping process where we run our target keywords against the list of keywords that the website is already ranking for (we can get this list from Google Search Console), with some Excel processing like using VLOOKUP we should be able to split the target keywords into 3 groups:

- Keywords that are already ranking somewhere (not in the top 5 results), already have good content available for them, and whose landing pages are not used to target other important keywords (those keywords will be placed in the queue for on-page optimization)

- Keywords that are ranking somewhere (not in the top 5 results) but whose landing pages are used to target other, more important keywords. Those keywords will be considered content gaps or content opportunities and new pages will be created for them

- Keywords that do not rank anywhere and have no landing pages to target them. Those will be considered content gaps and new content will be recommended for them
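The VLOOKUP step can also be sketched in code. Everything below is an illustrative assumption: the function name, the group labels, and the `rankings` and `reserved_urls` inputs stand in for a Search Console export and for your own list of pages already assigned to more important keywords. Whether good content already exists for a keyword still has to be judged manually.

```python
def map_content(target_keywords, rankings, reserved_urls, top_n=5):
    """Split target keywords into the three groups described above.

    rankings maps a lowercase keyword to (position, landing page URL).
    reserved_urls holds pages already used for more important keywords.
    """
    groups = {"on_page_queue": [], "new_page_needed": [], "content_gap": []}
    for keyword in target_keywords:
        match = rankings.get(keyword.lower())
        if match is None:
            groups["content_gap"].append(keyword)  # not ranking anywhere
            continue
        position, url = match
        if position <= top_n:
            continue  # already in the top results, outside the three groups
        if url in reserved_urls:
            groups["new_page_needed"].append(keyword)
        else:
            groups["on_page_queue"].append(keyword)
    return groups


# Made-up data for illustration only.
rankings = {
    "seo services": (12, "/services"),
    "seo company toronto": (18, "/"),
    "seo expert": (3, "/about"),
}
targets = ["SEO services", "SEO company Toronto", "SEO audit", "SEO expert"]
result = map_content(targets, rankings, reserved_urls={"/"})
print(result)
```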

Additional recommendations:

- Similar keywords that could be targeted using one page must be grouped together (no need to create a dedicated page for every close variation of the same keyword)

- While doing on-page SEO on existing pages it is very important to look at all keywords each page ranks for and make sure not to de-optimize the page for high traffic keywords

- Keywords that are not in your target keywords list but are ranking somewhere (not in the top 5 results) must be added to the on-page SEO queue (low priority task)

- For keywords that rank in the top 5 spots, on-page SEO should be done if need be. CTR optimization (on-SERP optimization) is recommended if those keywords have a low CTR

- Keywords that rank on the first page and trigger featured snippets like answer box (SEMrush can produce this list) must be optimized for the answer box

- Keywords that trigger Google local three pack (SEMrush can produce that list) must be used to optimize GMB (Google My Business), those keywords could be used to produce city pages (a dedicated page optimized for each major city in the area of operation)

Finding the right keywords, taking into consideration business priorities, the current level of authority, keyword difficulty and existing performance, is the foundation of a successful SEO project.

Interactive Onpage Optimisation and Content Maintenance

Posted on April 3, 2019

Interactive optimization and content maintenance start when:

- The SEO technical fundamentals are well optimized (a crawlable, indexable website with an SEO friendly CMS)

- Content creation and on page optimization are completed for top targeted keywords

- There is enough data in Google Search Console (preferably 13 months or more) to conduct content performance analysis

The main reason I call this stage interactive optimization is that it can start only a few months (1-3 months) after the first wave of SEO, once search engines have finished processing the new changes, so we can evaluate whether our SEO work:

- Generates the planned results (e.g. top ranking for target keywords and more traffic from different variations of keywords)

- Needs more tweaking (e.g. there is progress but we did not hit the expected results, so content enhancement will be required to improve performance)

- Creates new opportunities (e.g. some unexpected keywords are ranking for content that is not optimized for them, new content will be created to target them)

Evaluating the results of an SEO project:

There are many ways to evaluate the success of an SEO project, but for the sake of interactive optimization the best measure is the ranking improvement for the target keywords before and after the project. If most of the target keywords reached the desired ranking (top 5 spots in the SERPs, for example) then it is mission accomplished: we just need to monitor those keywords and move on to another project. If, on the other hand, the ranking did not reach the desired results, we need to reassess the situation and try different actions. The most popular actions to try in this case are:

- Enhance the content and optimize on-page elements (it is very important to check the top ranking websites for the target keywords and better their content and on-page work)

- Increase the number of internal links pointing to the content/pages where the target keywords did not achieve the desired ranking (this could be done using the website navigation or contextual links from the blog), and make sure to use keyword rich anchor text while doing that.

- Increase the number of external links pointing to the content/pages where the target keywords did not achieve the desired ranking

- Keep working on the website overall authority and branding

If all controllable SEO elements (content, on-page, technical SEO and internal linking) are well optimized but the desired ranking for some target keywords is still not achieved, then most likely the website/domain does not have the level of authority required to rank for those keywords. Going after a less competitive keyword, or a less competitive variation of the same keyword, is recommended in this case.

Exploring New SEO Opportunities:

To start this process we need a keyword mapping document, preferably in Excel, that lists all the keywords the website ranks for along with the URL, search volume, impressions and CTR. Google Search Console is the primary source for this data (impressions, CTR and position); SEMrush reports also include the majority of it, plus search volume. Using both is recommended, as some data like answer box and local pack triggers is not easily available in GSC. Once this document is available you need to create 7 categories for those keywords:

- Keywords that rank well and they have a good CTR (just monitor them)

- Keywords that rank well and they have a low CTR (do on SERP optimization for them)

- Keywords that do not rank well and they are the target keywords for that landing page (you need to apply the recommended actions listed in the previous section)

- Keywords that you did not plan to target but search engines find your content relevant to them, in most cases the landing page will not be able to target them while keeping the page optimized for the existing target keywords, those keywords will be marked as content gaps

- Keywords that trigger a local 3 pack (those will be used to optimize Google My Business/GMB profile)

- Keywords that trigger an answer box or any other featured snippet (featured snippets optimization is recommended if they rank at the top of the first page)

- Knowledge based keywords like when, what, where, how type of keywords (those will be used in the blog calendar)

Based on performance and priorities a new SEO project must be created to handle the new available findings in each category.
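The seven categories can be assigned with a simple rule set once the mapping document is loaded. The sketch below is hypothetical throughout: the field names, the label names and the thresholds (top 5 as "ranks well", 5% as a "good" CTR) are my own assumptions and should be tuned per project. A keyword can receive more than one label.

```python
from dataclasses import dataclass

QUESTION_WORDS = ("when", "what", "where", "how")


@dataclass
class KeywordRow:
    phrase: str
    position: float
    ctr: float  # click-through rate as a fraction, e.g. 0.05 for 5%
    is_target: bool = False
    local_pack: bool = False
    answer_box: bool = False


def categorize(row, top_n=5, good_ctr=0.05):
    """Return the category labels that apply to one keyword row."""
    labels = []
    if row.position <= top_n:
        labels.append("monitor" if row.ctr >= good_ctr else "serp_optimization")
    elif row.is_target:
        labels.append("needs_more_work")
    else:
        labels.append("content_gap")
    if row.local_pack:
        labels.append("google_my_business")
    if row.answer_box:
        labels.append("featured_snippet")
    words = row.phrase.lower().split()
    if words and words[0] in QUESTION_WORDS:
        labels.append("blog_calendar")
    return labels


print(categorize(KeywordRow("what is seo", 14, 0.0, answer_box=True)))
```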

Content maintenance:

Time does not always work in favor of SEO. After a few years of working on a website, a few things will happen:

- Search engines may change their algorithms and guidelines in a way that can negatively impact ranking and traffic

- Content can expire, not all content is evergreen

- Even for evergreen content the bar will be raised in some cases by other websites that enhance their content (e.g. by adding media files like videos), which will result in a ranking drop for less maintained content

Monitoring content performance and reacting quickly to red flags will be key to retaining and improving organic search engine traffic. Thankfully the new version of GSC, along with some crawling tools, has made content monitoring easier. What do we need to monitor?

- Keywords and pages that are losing traffic and ranking year over year (you can monitor that month over month but you need to mind seasonality), finding the reason behind that decline will decide next actions (e.g. if the content has expired then refresh the content)

- Keywords that rank on the second page, for those keywords content enhancement will be helpful, if the page is not focused enough on those keywords, creating a laser focused page to target them is a good option

- Pages that are not getting any impressions (those are normally pages with thin content or very old content that you need to improve, remove or redirect)
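The three checks above can be run against two exports of page performance data. This is a rough sketch under stated assumptions: the dictionary layout, the 20% drop threshold and the definition of the second page as positions 11 to 20 are mine, and the numbers are made up.

```python
def find_red_flags(this_year, last_year, drop_threshold=0.2):
    """Flag pages that lost clicks year over year, sit on page two, or get no impressions.

    Both inputs map a URL to {"clicks": int, "impressions": int, "position": float}.
    """
    flags = {"declining": [], "second_page": [], "no_impressions": []}
    for url, now in this_year.items():
        before = last_year.get(url)
        if before and before["clicks"] > 0:
            change = (now["clicks"] - before["clicks"]) / before["clicks"]
            if change <= -drop_threshold:
                flags["declining"].append(url)
        if now["impressions"] == 0:
            flags["no_impressions"].append(url)
        elif 10 < now["position"] <= 20:
            flags["second_page"].append(url)
    return flags


# Made-up numbers for illustration only.
this_year = {
    "/seo-expert": {"clicks": 60, "impressions": 2000, "position": 4.2},
    "/old-news": {"clicks": 0, "impressions": 0, "position": 0.0},
    "/seo-audit": {"clicks": 30, "impressions": 900, "position": 13.5},
}
last_year = {
    "/seo-expert": {"clicks": 100, "impressions": 2500, "position": 3.1},
    "/seo-audit": {"clicks": 28, "impressions": 800, "position": 14.0},
}
flags = find_red_flags(this_year, last_year)
print(flags)
```

Comparing the same period year over year, as done here, sidesteps the seasonality problem mentioned above.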

Interactive SEO optimization is an endless task, especially for large websites, and it is the main reason for hiring an in-house SEO specialist.

On Page SEO

Posted on April 2, 2019

On-page SEO refers to any search engine optimization work you do on a website that is fully under your control (mainly on the content and the source code of the page). At this stage I assume you have completed the keyword research and the content mapping, which should enable you to produce a list of URLs with one or two keywords to optimize each URL for.

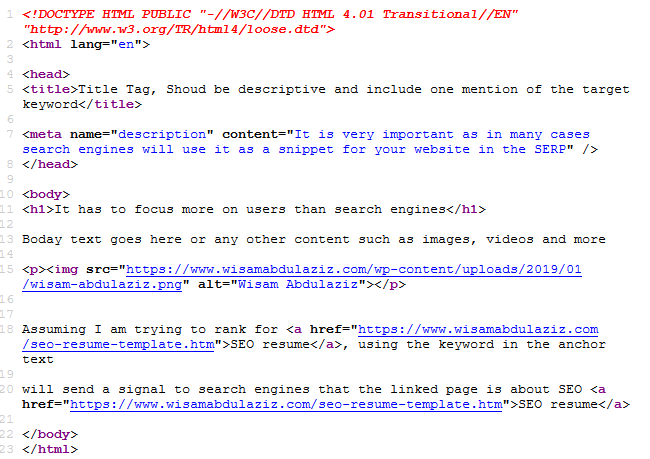

Before doing any on-page SEO you need some understanding of what constitutes a web page. A web page is simply a combination of HTML code, text and embedded resources (JavaScript files, CSS files and more) that is passed from a server to a browser; the browser renders that combination into the visual web page that you see every day. On-page optimization focuses mainly on the visible text on the page and the HTML code. To view the source code of a web page, right-click inside the page >> click view source and you will see code similar to the code below (just a basic page I created as an example):

This code will render in the browser like this:

It is very important to understand the visibility of each element in the HTML code to users and search engines, there are three possibilities for a web page element visibility:

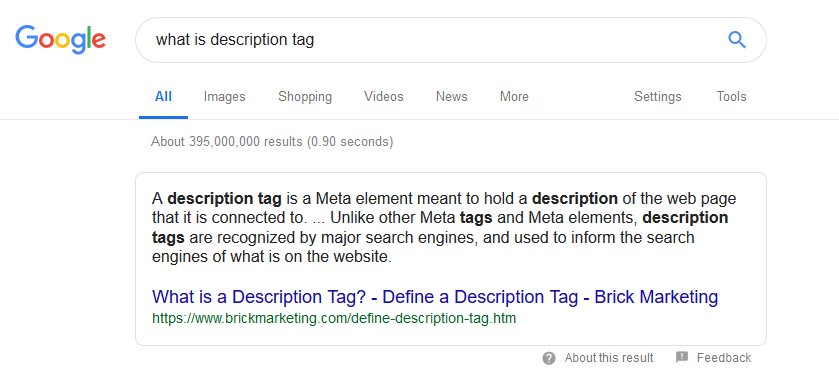

- Visible to search engine crawlers but not to users on the page, although it will be used in the search engine results page or SERP (e.g. description tag)

- Visible to both users and search engines (e.g. H1 tag)

- Visible mainly to search engines and social media bots with less chance to show on the SERP (e.g. structured data and rich snippet)
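To see these elements the way a crawler might, you can pull them out of the source code. The sketch below uses Python's standard `html.parser` module on a made-up sample page; the class name and the page content are illustrative assumptions, and real crawlers do far more than this.

```python
from html.parser import HTMLParser

# A made-up minimal page used only for this illustration.
SAMPLE_PAGE = """<html>
<head>
<title>SEO Expert 15 Years Doing SEO - Wisam Abdulaziz</title>
<meta name="description" content="A long career as a SEO expert.">
</head>
<body>
<h1>Check Points That an SEO Expert Can Audit for Your Website</h1>
<p>Body content goes here.</p>
</body>
</html>"""


class OnPageElements(HTMLParser):
    """Collect the title, meta description and H1 text of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self.h1 = ""
        self._current = None  # tag whose text is currently being collected

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1"):
            self._current = tag
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1 += data


parser = OnPageElements()
parser.feed(SAMPLE_PAGE)
print(parser.title)
print(parser.description)
print(parser.h1)
```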

On-page optimization cannot be done in isolation of a marketing plan, content should appeal to users and encourage them to take certain actions, if the element is invisible to users we can possibly optimize it more for search engines.

On-page SEO, in a few words, is making sure that key page elements like the title tag, H1, URL and page content include the target keyword directly or semantically. The keyword mention is important, but it should not come at the expense of user experience:

- If the element is visible to users on your page (the H1 tag for example) you have to be extremely cautious not to damage user experience while optimizing, and focus more on users than on keyword mentions

- If the element is not visible on your page but is visible in the SERP (the description tag for example) you can be more aggressive with keyword mentions, but you also need to focus on making that element more enticing for people to click

Title tag:

Visible to search engines and carries significant weight in ranking. It is not fully visible to users, as browsers show it only in the tab or title bar at the top of the window, and it is used in many cases by search engines as the clickable text that leads to a website in the SERP.

Optimizing the title tag is a tricky process, as search engines will show only about 50-60 characters (the cutoff is based on pixel width), which could be only 5-10 words depending on the length of each word. The first segment of the title tag should describe what the page is about (a keyword mention is recommended in this segment); the second segment could be generic, like the brand name. Example (assuming I am optimizing a page for SEO expert):

SEO Expert 15 Years Doing SEO - Wisam Abdulaziz

You can see that the title tag includes one mention of the keyword, it has a marketing message (15 years doing SEO) then another partial mention of the target keyword (SEO), the brand name was placed at the end
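A quick length and keyword check can catch titles that are likely to be cut off. This is a minimal sketch: the function names are hypothetical, and the 60 character limit is only the rough guideline mentioned above, since search engines actually truncate by pixel width.

```python
def build_title(primary: str, brand: str, max_chars: int = 60) -> str:
    """Join the descriptive segment and the brand, dropping the brand if it will not fit."""
    full = f"{primary} - {brand}"
    return full if len(full) <= max_chars else primary


def title_report(title: str, keyword: str, max_chars: int = 60) -> dict:
    """Report the title length, whether it fits, and whether it mentions the keyword."""
    return {
        "length": len(title),
        "fits": len(title) <= max_chars,
        "has_keyword": keyword.lower() in title.lower(),
    }


title = build_title("SEO Expert 15 Years Doing SEO", "Wisam Abdulaziz")
report = title_report(title, "SEO expert")
print(title, report)
```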

Description tag:

Visible to search engines only and doesn't carry significant weight in ranking, but it is used in many cases by search engines as the snippet in the SERP, which gives it huge value from a click through rate (CTR) perspective.

Similar to the title tag there is a length limit (155-160 characters, and it can go longer than that in some cases). This is decent real estate for delivering a good marketing message that entices people to click on your listing in the SERP. A keyword mention is still recommended, as Google normally bolds searched keywords inside the snippet, example:

A long career as a SEO expert where I helped more than 1000 clients to gain more exposure on search engines that lead to more traffic and business.

I have one keyword mention and a marketing message.

H1 Tag:

Every page should have a heading. Some CMSs (content management systems) place it in an H1, H2 or H3 tag, and others do not use any Hx tag at all; regardless of how your heading is coded, this section speaks to optimizing the first heading of a web page. H1 tags have a premium location on the page: they are normally located at the top (above the fold in most cases) and are fully visible to users. Because of that, user experience and delivering the right marketing message are more important than keyword mentions, as the people who read H1 tags are already on your website and are potential leads or customers.

The H1 tag does not have a character limit, as search engines do not use it very often in their SERP, but I would still keep it under about 10 words, example:

Check Points That an SEO Expert Can Audit for Your Website

I have a keyword mention, I tried to create some curiosity so the user will continue reading the page

Body Content:

This is what matters most to both users and search engines. The first priority here is to create winning content that gives users the best answer to their question (search query/keyword). The first thing to do is search the target keyword on Google and visit the top 5 or even top 10 results to find out the characteristics of winning content for your target keyword, and also what those pages are missing. Example of a winning content assessment:

- Average number of words is 500 words/page

- Average number of images is 5

- There is one video on each of them

- They are missing some statistics regarding that topic

- They are missing linking out to some great resources around that topic

Now start writing your content to better the current winning content. Keyword mentions normally come naturally in the body content; if not, one or two mentions in a user friendly manner will suffice.

Internal Linking:

Google started their search engine based on a patented algorithm called PageRank, which gives pages with a lot of links (external or internal) more value; the public toolbar version of the score ran from 0 to 10. This concept also applies to internal linking optimization: the more internal links a page has, the more authority or importance it gains with Google, which gives it a better chance to rank.
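To make the idea concrete, here is a simplified textbook version of the PageRank calculation applied to a tiny, made-up internal link graph. It is only a sketch: the 0.85 damping factor is the commonly cited textbook value, the site structure is hypothetical, and this is not how Google's production ranking works today.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by power iteration.

    links maps each page to the list of pages it links to.
    Every linked page must also appear as a key.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly.
                for other in pages:
                    new_rank[other] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical three-page site: the services page gets two internal links, the blog one.
site = {
    "/": ["/seo-services", "/blog"],
    "/blog": ["/seo-services", "/"],
    "/seo-services": ["/"],
}
scores = pagerank(site)
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

In this toy graph the services page ends up with a higher score than the blog simply because more internal links point to it.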

The anchor text of the internal links will pass a strong relevancy signal to search engines about the content of the destination page, so it is important to keep it as keyword rich as possible.

The easiest way to understand internal linking optimization is to visit a Wikipedia page (try this one). You can see that every time they have a page related to a phrase in the article they link to it. This contextual interlinking passes weight, or PageRank, to the linked pages, and it also passes relevancy signals by using the keyword as anchor text.

3 ways to do internal linking:

- The Wikipedia style of internal linking, called contextual internal linking

- Optimizing the website information architecture (IA) by linking to the most important pages from the upper navigation using keyword rich anchor text. While doing IA optimization it is important to keep user experience in mind, as the upper navigation's main function is helping users find content easily and quickly on the website

- Breadcrumbs navigation is a good tool that can improve user experience and help with keyword rich internal linking

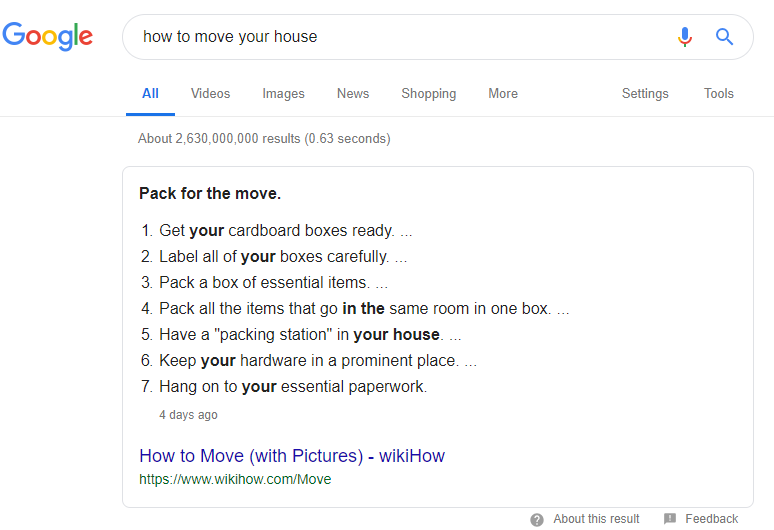

Answer Box Optimization:

Google has 20+ types of rich snippet (the term refers to any result that is not the blue link with gray text beneath it). The most popular one to optimize for is the answer box, which normally sits at the top of the page (often called position zero). Ranking in this position normally comes with a very high CTR that can reach 40%, and the answer box is in many cases used for voice search results on devices like Google Home.

Picking the right keyword to target is key to achieving results here. If you do not have any chance to rank organically on the first page (preferably in the top 5 spots) for a keyword that triggers an answer box, then find another one, as your chance of taking that answer box is close to zero. Do not listen to those who say it is a schema play and ranking doesn't matter; in more than 90% of cases schema has nothing to do with the answer box.

Recommendations to gain answer box ranking:

- Do the regular on-page optimization you do normally for any page as explained in this section (keyword mentions in title tag, H1, body etc)

- Optimize the opening paragraph or any other paragraph in the article to answer the question (in most cases it is the keyword that triggers the answer box)

- Keep the content well structured and organized (use spaces, breaks, <ol>, <p> and other mark up tags that make a post easy to read for humans)

Recommendations to gain a list based answer box ranking (with a list inside it):

- Same recommendations above for regular answer box, make sure to include a sentence that clearly defines the purpose of the list right before the first item

- Optimize the title tag and H1 to include keywords that normally trigger the answer box with a list (Example: most popular, things to do, best, types of, steps, facts).

- Each item on the list should be distinguished from the rest of the text (e.g. with <h2>, <strong> or <li>)

URL Optimization:

The URL of a web page is not fully visible to users (browsers show it in the address bar, where many users do not pay attention). Search engines read it and sometimes use it in the SERP, but it barely has any weight in ranking (it used to have a lot of weight back in the day). You can focus more on search engines while optimizing URLs, example:

www.wisamabdulaziz.com/seo-expert

Notes:

- Do not change the URLs for existing pages only for the sake of making the URL more descriptive or keyword rich (too many things can go wrong in a process like that)

- URLs are used by search engines as content identifier, all links will be counted to a URL, changing one letter in the URL without proper 301 redirect can cause page authority loss and potentially ranking loss

- Keep the URLs clean and make sure the URL is changing when people navigate to a new page, avoid using # tags or complex special characters and keep them readable for humans
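For new pages, a small helper can produce clean, readable slugs, and a redirect map keeps track of any URL that does have to change. Both functions are hypothetical illustrations; the slug rules (lowercase, hyphens, ASCII letters and digits only) are an assumption and would drop non-English characters.

```python
import re


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen separated URL slug."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    return slug.strip("-")


def redirect_map(old_to_new: dict) -> list:
    """List (old URL, new URL, status code) for every URL that actually changes."""
    return [(old, new, 301) for old, new in old_to_new.items() if old != new]


print(slugify("SEO Expert"))
print(redirect_map({"/page?id=7": "/seo-expert", "/about": "/about"}))
```

The redirect list can then be handed to whoever maintains the server or CMS configuration, so that every changed URL gets its 301.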

Images Optimization:

The two elements that can be optimized for an image are both invisible: the ALT tag (invisible 99% of the time) and the file name. Those elements must describe the image content in a few words. If you can make them keyword rich that is good; if not, just focus on keeping them descriptive of the image content.

Finally you need to know that the on-page optimization process applies to many other platforms like:

- Google My Business

- YouTube

- App stores like iTunes and Google Play

They have elements very similar to those of a web page, like title and description, and the concept of including keywords in them and making them enticing for people to click is the same. A few other elements are not available in a web page but are available on those platforms:

- Category

- Tags

- Thumbnail

User experience Conversion Rate and CTR Optimization

Posted on March 21, 2019

Conversion rate optimization (CRO), UX and CTR optimization could be considered part of SEO now. SEOs are supposed to optimize websites to score well on known ranking factors (Google uses 200+ of them, but only a few are known to SEOs). But do search engines have any ranking factors related to CTR, CRO and UX? In their early days search engines did not use any user experience related ranking factors, but that has changed in recent years. The ones that Google has admitted to using as ranking factors (with a small impact on results) are:

- Website speed

- Secure websites

- Mobile first index (they want to understand the user experience on mobile phones, especially in the above the fold area)

Let us also remember that Googlebot has evolved a lot: it can crawl, parse and render pages with a better understanding of the layout (e.g. what content is above the fold, what is visible to users on the first load and what is not). Understanding user experience on websites is becoming a high priority for Google, especially in the mobile era where it is difficult to create a good user experience given the limited screen space available to developers. The other looming question is whether Google is going to use the user experience data available to them as ranking factors in the future, if they are not already using it. Google owns:

- The most popular browser on the web (Chrome)

- The most popular email service (Gmail)

- Cookies on almost every website on the web (Via Google Analytics, YouTube and more)

They can go beyond link profile and on-page SEO analysis to find quality websites; there are many user experience signals available to Google now that they can use as ranking factors.

To be clear, I am not asking you to be a UX expert; you just need to gain enough knowledge to cover the basics. There are four types of UX optimization that you can do, and they have different levels of difficulty and time requirements. I will walk you through them one by one and you can choose what to apply for each project depending on the needs and the available budget:

- Web design standards and convention of the web

- Experimental (using A-B tests and multivariate testing)

- Data driven

- User feedback driven

Web design standards and convention of the web

People visit 5-7 websites/day on average, and most websites follow very similar standards that have come to be expected by most users when they visit a new website. Some of them are:

- A logo on the left upper corner of the page that you can click on to take you to the home page

- Upper navigation menu in the header of the page, in most cases it has a contact us page

- A slider or a page wide image at the top is becoming popular in modern design

- A phone number placed in the header and the footer of the website and social media profiles linked from header or footer

- Websites should adapt to all device sizes (responsive design)

- A call to action in the header area of the website (read more, get a quote, etc.)

- The message has to be loud and clear above the fold (marketing message, company vision, summary of the service)

By checking a website's design against these conventions, you will be able to come up with a few recommendations to improve user experience.

Experimental A-B testing and multivariate testing

You can serve users different versions of a page using a tool like Google Optimize: versions that differ only in a few page elements (multivariate testing) or two significantly different pages (A-B testing). Once you have enough data, you can pick the winning version (the one with the lower bounce rate and better conversion rate) and use it on the website.

Landing page services like Unbounce and Instapage now offer similar features.

Please note that you need a significant amount of traffic and conversions for those tests to give you trustworthy results, which small websites with only a few conversions per week do not have. For small websites, follow conversion rate best practices (e.g. a prominent call to action) and the conventions of the web when doing CRO.
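To make the point about traffic volume concrete, here is a minimal sketch of one common way to check whether the difference between two versions is trustworthy: a two-proportion z-test. The function name, the visitor and conversion numbers, and the 0.05 threshold are assumptions for illustration only; testing tools may use different statistics internally.

```python
import math

def two_proportion_z_test(conv_a, visits_a, conv_b, visits_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / visits_a
    rate_b = conv_b / visits_b
    # Pooled conversion rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (visits_a + visits_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical small website: 200 visits per version, a handful of conversions.
_, p_small = two_proportion_z_test(5, 200, 9, 200)
# Hypothetical large website: similar conversion rates, 100 times the traffic.
_, p_large = two_proportion_z_test(500, 20000, 600, 20000)

print(p_small < 0.05)  # False: not enough data to trust the difference
print(p_large < 0.05)  # True: the difference is unlikely to be chance
```

In this example the small website sees a bigger relative lift than the large one, yet only the large website has enough data for the result to be trusted.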

Data driven:

Technology can provide us with many user behaviour signals without users even knowing that we are collecting them (we still have to respect users' privacy). A few examples:

- Mouse movement tracking: you can use CrazyEgg, HotJar or a free service like HeatMap. Once you have enough data, you should be able to restructure the page to make it more effective for users

- GA (Google Analytics): a good starting point is analyzing pages with a high bounce rate, which in most cases is a signal that users are not finding what they are looking for quickly. Find the top keywords that drive traffic to those pages and make sure that users get their questions answered quickly, without taking any extra actions like scrolling down or clicking on a tab or a button

- Landing pages with a low conversion rate should be analyzed and re-optimized. A low conversion rate could be the result of a poorly structured page, or a signal of low trust due to a lack of social proof; adding partner and award badges or client and user testimonials could help

- Slow-loading pages, which can be found in GA, present an opportunity for improvement. Reducing page load time will have a positive impact on conversion rate

- Low CTR (click-through rate) in the SERP (search engine results page): this data is available in GSC (Google Search Console). A low CTR could be a signal that your content is not relevant enough for that search query, or that the text snippet used by search engines is not good enough. Always make sure that title tags and description tags entice users to click. If Google is picking the SERP snippet from another section of the page, optimize that text to make it more relevant to the target keyword and more attractive for users to click

There are many other data sources where you can find user behaviour signals that you can use to optimize your website for a better user experience and, eventually, better ranking in search engines.

User feedback based:

Surveying website users and asking them general questions, such as what they think about the website or what changes they would like to see, is a very effective usability improvement tactic. This can be done using five-second testing or similar testing services, where you upload a screenshot of a website and ask people to look at it for 5 seconds before answering a few questions like:

- What services does this website offer?

- What phrases or words do you remember from the website?

- What do you think about the design of the website?

There are many solutions for conducting a survey on a website, depending on the CMS you are using. Some solutions that rely on embedded JavaScript code can work on almost any website; Getsitecontrol is one example.

Trust:

I tried my best not to tap into marketing while talking about user experience and conversion rate, but that is really difficult. You can create the best landing page on the web, but it will still convert less than a poorly designed landing page if users do not trust it. Think about a bank like Royal Bank offering a wealth management service and Joe the financial adviser offering the same service: even if Joe has a very well optimized landing page and offers the same service for a lower price, Royal Bank will have a better chance to convert. Here are a few tips to overcome that:

- Be a brand: create a nice logo and use it consistently, design a professional website, and pick colours and fonts for your brand and use them on all marketing material. Advertise on different channels, especially community events.

- Use social proof signals like user testimonials, case studies, stats, and award and media mention badges (e.g. "as seen in the Toronto Star")

- Offer quick communication options like online chat

Finally, even if search engines do not use more user experience signals as ranking factors in the future, providing a good user experience should always be a priority. User experience can even affect other areas of SEO, like attracting links: do people link to websites where they had a bad user experience? The answer is no.

Technical SEO

Posted on March 19, 2019

Technical SEO refers to the optimization work done on a website's technical infrastructure (HTML source code, server-side code, hosting, assets and more) to make it search engine friendly.

When it comes to technical SEO, there are a few buzzwords that you always need to keep in mind:

- Crawlability

- Renderability

- Indexability (Readability)

How do search engines work (mainly Google):

When search engines find a new web page, they send their crawlers (software that behaves like a browser, installed on a powerful computer) to read the source code of that page and save it to the search engine's storage servers to be parsed and processed later.

The second step is parsing that source code and possibly saving missing resources like images, CSS and other dependencies. If the crawler decides that the content in the source code is readable without rendering (which is the case when the content is included in plain HTML or simple JavaScript), it will start processing it by turning the source code into structured data (think of an Excel sheet with columns and rows) that will eventually become a database. Given the size of the web, searching raw files and returning results quickly is impossible, but searching a database and returning results in less than a second is feasible. To turn web content into a searchable database, search engines need to isolate text from code. If they find content marked up with Schema (structured data), turning it into a database is a lot easier; HTML elements like title tags and description tags are also easy to process.

If the crawler decides that this is a JavaScript-heavy page that needs to be rendered to find the text content, a more advanced crawler will be sent later (sometimes after a few days) to render the page and get it ready for processing. Watch the video below to understand how Google's crawlers work.

Renderability was not a thing when search engines started. It is a very resource-intensive and slow process, but with more websites using advanced JavaScript frameworks like Angular (where in some cases the source code does not contain any text content), search engines started to see a need to fully render the page in order to capture the content. The other benefit of fully rendering the page is understanding the location and the state of the content on the page (hidden content, above the fold, below the fold, etc.).

Currently the only search engine that does well with rendering is Google. It sends a basic crawler first (which can understand simple JavaScript but not frameworks like Angular), then comes back later with another crawler that can fully render the page. Check this tool to see how a web page renders with Googlebot.

Once processing is completed, a functional copy of the page is stored on the search engine's servers, and it is eventually made available to the public through the cache:URL command. At this point the page is fully indexed and able to rank for whatever keywords the search engine decides it is relevant to, based on the quality of the content and the authority of the website (in other words, the ranking algorithm).

Crawlability Optimization (Speed and mobile friendliness):

The first step in making a website crawlable is providing access points to the crawlers, like:

- Site map

- Internal links from other pages

- External Links

- RSS feed

- URL submission to Google or Bing Search Console, or using their indexing API if it is available (only available for a few industries)

Discuss with your webmaster what happens when new content is added to the website, and make sure there is at least one access point available for the crawler to find that page (ideally the sitemap plus one or more internal links from prominent pages). A modern CMS like WordPress will provide access points automatically when you add a new post, but not when you add a new page; that is where you need to manually modify the information architecture to include a link to that page.
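As a rough illustration of the sitemap access point, the sketch below builds a minimal XML sitemap with Python's standard library. The URLs and dates are made up, and a CMS or plugin would normally generate this file for you; the element names follow the public sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs, lastmod as 'YYYY-MM-DD'."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical pages, including a newly added one that needs to be discovered.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2019-03-01"),
    ("https://www.example.com/new-page/", "2019-03-19"),
])
print(sitemap_xml)
```

Regenerating this file whenever a page is added gives crawlers one reliable access point even before any internal links exist.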

Each piece of content available on a website must have its own dedicated URL, and this URL must be clean and not fragmented using characters like #. A Single Page Application (SPA) is an example of a situation that you need to avoid, where the whole website operates on a single URL (normally the root domain, and the rest are fragment URLs). In this case technologies like AJAX (a mix of HTML and JavaScript) are used to load content from the database to answer any new page request (#anotherpage). Users will not see any issue with that, but search engines will not be able to crawl the website: they use dedicated URLs as keys to define a page in their index, and those keys are not available here because search engines totally ignore URL fragments.
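The effect of ignoring fragments can be sketched in a few lines of Python. The `index_key` helper and the example URLs are hypothetical; the sketch only shows that once the fragment is dropped, every "page" of a fragment-based site collapses to the same key, while clean URLs stay distinct.

```python
from urllib.parse import urldefrag

def index_key(url):
    """Drop the fragment, as a crawler that ignores fragments would."""
    return urldefrag(url).url

spa_urls = [
    "https://www.example.com/#home",
    "https://www.example.com/#services",
    "https://www.example.com/#contact",
]
clean_urls = [
    "https://www.example.com/",
    "https://www.example.com/services/",
    "https://www.example.com/contact/",
]

spa_keys = {index_key(u) for u in spa_urls}
clean_keys = {index_key(u) for u in clean_urls}

print(len(spa_keys))    # 1: all three fragment URLs look like one page
print(len(clean_keys))  # 3: each clean URL is its own page
```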

Search engines have a limit on the number of pages they can crawl from a website, both in a session and in total, which they call the crawl budget. Most websites (with fewer than a million pages and not a lot of new content added every day) do not need to worry about that. If you have a large website, you need to make sure that new content is crawled and indexed quickly; providing strong internal links to new pages and pushing them to the sitemap quickly can help a lot with that.

URLs management: Many websites use parameters in URLs for different reasons. Many eCommerce websites use URL parameters to provide pages for the same product in different colours or sizes (faceted navigation). Sometimes search engines are able to index those pages and end up with an infinite number of pages to crawl, which can create both a crawling issue and a duplicate content issue. Search engines provide webmasters with different tools to control crawlability and indexability by excluding pages from crawling. Ideally, if the website is structured well, there will be less need to use any of the tools below to influence crawlability:

- Robots.txt, a file located on the root of your website where you can provide rules and direction to search engines how to crawl the website, you can disallow search engines from crawling a folder, a pattern, a file or a file type.

- Canonical tags, <link rel="canonical" href="https://www.wisamabdulaziz.com/" /> you can place them in page B's header to tell search engines that the page with the original content is page A, located at that canonical URL. Canonical tags are a good alternative to a 301 redirect, as they do not need any server-side coding, which makes them easier to implement

- Redirects, I mean here server-side redirects (301 for example), which are used to tell search engines that page A was moved to page B. They should be used only when the content on page A was actually moved to page B. They can also be used when there is more than one page with very similar content.

- Meta refresh, <meta http-equiv="refresh" content="0;URL='http://newpage.example.com/'" /> normally located in the header area, it directs browsers to redirect users to another page. Search engines listen to meta refresh; when the waiting time is 0 they treat it like a 301 redirect

- noindex tags, <meta name="robots" content="noindex"> they should be placed in the header of a page that you do not want search engines to index (the page still has to be crawlable for the tag to be seen)
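To check how a set of robots.txt rules applies to specific URLs, Python's standard `urllib.robotparser` module can be used as in the sketch below. The rules and URLs are made up for illustration, and this parser only does simple prefix matching, so it may not behave exactly like every search engine's own robots.txt implementation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of the cart and internal search pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

def is_crawlable(robots_txt, url, user_agent="*"):
    """Return True if the given rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

print(is_crawlable(ROBOTS_TXT, "https://www.example.com/blog/post", "Googlebot"))      # True
print(is_crawlable(ROBOTS_TXT, "https://www.example.com/cart/checkout", "Googlebot"))  # False
```

Running a check like this against a list of important URLs before deploying a new robots.txt is a cheap way to avoid blocking pages by accident.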

The final thing to optimize for crawlability is website speed, which is also a ranking factor. A few quick steps you can take to have a fast website:

- Use a fast host and always make sure you have extra resources with your host. If your shared host is not doing the job, upgrade to a VPS or a dedicated server; it is a worthwhile investment

- Use a reliable fast CMS like WordPress

- Cache your dynamic pages (WordPress posts for example) into an HTML format

- Compress and optimize images

- Minify CSS and JavaScript

To test your website you can use different speed testing tools listed here.

Google Bot For Mobile:

With mobile users surpassing desktop users a few years ago, mobile-friendly websites are becoming more important to search engines and web developers. Search engines like Google have created a mobile crawler to better understand how a website is going to look to mobile users. When they find a website that is ready for mobile users, they set their mobile crawler as the default crawler for that website (what they call mobile first). There are a few steps you can take to make sure your website is mobile friendly (for both users and crawlers):

- Use responsive design for your website

- Keep important content above the fold

- Make sure your responsive website is error free; you can use GSC or the Google Mobile Friendly Test for that

- Keep the mobile version as fast as possible; if you cannot do that for technical or design reasons, consider using Accelerated Mobile Pages (AMP)

Renderability Optimization:

The best optimization you can do for renderability is to remove the need for search engines to render your website with a second (advanced) crawl. If your website is built around an advanced JavaScript platform like Angular, it is strongly recommended to give crawlers like Googlebot a pre-rendered HTML copy of each page (regular users can still get the Angular version of the website; this practice is called dynamic rendering). This can be done using the built-in features of Angular Universal or using third-party solutions like Prerender.io.
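The decision at the heart of dynamic rendering can be sketched as a simple user-agent check. This is only an illustration of the idea: the token list and the `select_variant` function are assumptions, and solutions like Prerender.io ship their own, much longer crawler lists and handle the actual rendering.

```python
# Hypothetical list of crawler tokens; a real deployment would maintain its own.
CRAWLER_TOKENS = ("googlebot", "bingbot")

def select_variant(user_agent):
    """Decide which version of a page to serve for a given User-Agent header."""
    ua = (user_agent or "").lower()
    if any(token in ua for token in CRAWLER_TOKENS):
        return "prerendered_html"   # static HTML snapshot for crawlers
    return "client_side_app"        # regular JavaScript application for users

googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/73.0 Safari/537.36"

print(select_variant(googlebot_ua))  # prerendered_html
print(select_variant(browser_ua))    # client_side_app
```

Both variants should contain the same content; the only difference is who does the rendering work.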

Do not block resources (CSS, JavaScript and images) that are needed to render the website. Back in the day, webmasters used to block those resources using robots.txt to reduce the load on the server or for security purposes. Inspect your website using Google Search Console; if you see any blocked resources that Google needs to render the website, discuss with your web developer how you can safely allow those resources to be crawled.

Indexability and Readability (Schema)

When a website is well optimized for crawlability and renderability, indexability will almost automatically be taken care of. The key point for indexability is providing a page with a dedicated clean URL that returns unique, substantial content and loads fast, so search engines can crawl it and store it on their servers.

Content that can cause indexability issues:

- Thin content, as it may not be kept in the index

- Duplicate content

- Text in images, SWF files, video files and complex JavaScript files; this type of content will not make its way to the index and will not be searchable in Google

Content that can help search engines with parsing and indexability:

- Structured data, mainly Schema, can help search engines turn content into a searchable database almost without any processing. Eventually that will help your website get rich results in the SERP (an example is the five-star review that Google adds for some websites)

- Using HTML markup to organize content (e.g. <h2>, <strong>, <ol>, <li>, <p>) will make it easier for search engines to index your content and show it, when applicable, in their featured snippets like the answer box.
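As an example of what Schema structured data looks like in practice, the sketch below generates a JSON-LD script tag with an aggregate rating, the kind of markup associated with star ratings in the SERP. The product name and numbers are made up, and whether a rich result is actually shown is entirely up to the search engine.

```python
import json

def review_snippet_jsonld(name, rating_value, review_count):
    """Return a JSON-LD script tag describing a product with an aggregate rating."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }
    return '<script type="application/ld+json">' + json.dumps(data) + "</script>"

# Hypothetical product and review numbers.
tag = review_snippet_jsonld("Example Web Hosting Plan", 4.8, 127)
print(tag)
```

The resulting tag is placed in the HTML of the page it describes, so the rating is available to search engines as ready-made rows and columns rather than free text.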

Monitoring and errors fixing:

Continuous monitoring of a website's crawlability and indexability is key to avoiding situations where part of the website becomes uncrawlable (for example, your webmaster adding a noindex tag to every page on the website). There are different tools that can help you with that:

- Google Search Console (GSC): after you verify your website with GSC, Google will start providing you with feedback regarding your website's health. The index coverage report is the most important section of the dashboard to keep an eye on for crawlability and indexability issues. Google will send messages through the message centre (there is an option to forward them to your email) for serious crawlability issues

- Crawling tools: SEMrush, Ahrefs, Oncrawl, Screaming Frog can be helpful to find out about errors

- Monitor 404 errors in Google Analytics and GSC. Make sure to customize your 404 error pages and add the words "404 not found" to the title tag so it becomes easier to find 404 error pages using Google Analytics

- Monitor indexability: check whether the number of indexed pages in GSC makes sense based on the size of your website (it should not be too big or too small compared to the actual number of unique pages on your website)

- Monitor renderability using the URL inspection tool in GSC. Make sure Google can render the pages as closely as possible to how users see them, and pay attention to blocked resources that are required to render the website (the URL inspection tool will notify you about them)
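Some of these checks can also be automated between audits. The sketch below is a minimal, assumption-laden example that parses a page's HTML with Python's standard library and reports whether it carries a noindex tag, which canonical URL it declares, and whether its title marks it as a custom 404 page. The `audit` function and the sample HTML are hypothetical; fetching the pages and scheduling the checks are left out.

```python
from html.parser import HTMLParser

class IndexabilityAudit(HTMLParser):
    """Collect the robots meta tag, canonical link and title of a page."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            if "noindex" in (attrs.get("content") or "").lower():
                self.noindex = True
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def audit(html):
    parser = IndexabilityAudit()
    parser.feed(html)
    return {
        "noindex": parser.noindex,
        "canonical": parser.canonical,
        "is_404_page": "404 not found" in parser.title.lower(),
    }

sample = """<html><head>
<title>Blue Widgets</title>
<meta name="robots" content="noindex">
<link rel="canonical" href="https://www.example.com/widgets/" />
</head><body></body></html>"""

print(audit(sample))
```

Running this over the pages that matter most and alerting when `noindex` unexpectedly becomes true would catch the "noindex on every page" accident described above.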

Index coverage monitoring and analysis definitely needs to be a service that you offer to your clients as an SEO specialist, a GSC monthly or quarterly audit is strongly recommended.

Next step: user experience and conversion rate optimization

Off page SEO And Link Building

Posted on March 17, 2019

Off page SEO refers to all the activities that do not require any changes to your website or your content but can still help with ranking. The most popular off page SEO activity is link building. Before explaining more about link building, I want you to be aware that historically the harshest penalties Google has applied to websites were all driven by link building activities that violate Google's guidelines. I have been through this myself: I relied on artificial link building (mainly article syndication with keyword-rich anchor text) and ended up with manual actions on some websites in March 2012, then was hit again by the Penguin update in April 2012. So take your time to learn more about link building before you start acquiring links or hiring someone to do that for you.

Why are inbound links the backbone of Google's ranking algorithm?

Think about how marketing happens in the offline world. You can say anything you want about yourself in a resume, but at the end of the day the employer will call some references to verify it. When we hire a business or buy a product, we try to find other people who have used it before and hear about their experience (in other words, reviews). We watch celebrities in TV ads all the time, and as marketers we know those ads work well because people like and trust those public figures, so an endorsement from them has a higher impact on people. Ask yourself: if you could choose between listing the prime minister of Canada or a 15-year-old child as a reference on your resume, which would you choose?

Let us take the above analogy to the web. You can say anything on your website and do perfect on page SEO, but so can 1,000 other websites, so how can search engines rank 1,000 or more websites that are all well optimized for a keyword like "web hosting"? In the early days of search engines, Yahoo could not figure out a solution for that and ended up showing spammy, low-quality results, which opened the door for Google to come up with PageRank (will talk about it more here). PageRank is a score from 0 to 10 for every web page in Google's index, based on the number of links pointing back to (voting for) that page and the PageRank of the linking pages. Google gave PR a big weight in its algorithm, and the result was a huge improvement in the quality of its results.
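The idea of links as weighted votes can be illustrated with the simplified, publicly described form of PageRank. The sketch below is a textbook-style iteration over a tiny made-up link graph, not Google's production algorithm; the damping factor of 0.85 and the iteration count are conventional assumptions, and the scores it returns are fractions that sum to 1 rather than the 0-10 scale webmasters used to see.

```python
def pagerank(links, damping=0.85, iterations=50):
    """links: dict mapping each page to the list of pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Each page splits its current score among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score evenly.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank

# Hypothetical graph: a, b and d all link to c; c links back to a only.
graph = {"a": ["c"], "b": ["c"], "c": ["a"], "d": ["c"]}
scores = pagerank(graph)
for page in sorted(scores, key=scores.get, reverse=True):
    print(page, round(scores[page], 3))
```

Page c ends up on top because it receives the most votes, and page a outranks b and d because its single inbound link comes from the highest-scoring page, which is exactly the "who vouches for you" effect from the resume analogy.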

What makes a quality link:

When I started doing SEO in 2004, and until 2010, the answer was clear and easy: Google's PageRank. You simply needed to get as many links as possible from pages with a high PageRank (hopefully 10 if you could). In 2010 Google started to delay PageRank updates, then stopped updating it altogether, and finally made PR invisible to all webmasters. That does not mean Google no longer uses it; it is still a big part of their algorithm, but webmasters cannot see it anymore. Many third-party tools saw an opportunity in that and started building their own versions of PageRank (DA and PA by Moz, TF and CF by Majestic, UR and DR by Ahrefs, Authority Score by SEMrush), but none of these has successfully replaced PR. People who are new to the SEO industry and in-house managers swear by some of them, like DA, and link traders use them a lot to sell links.

What is the public metric that you can safely use to evaluate the quality of a web page? How to do link prospecting? The answer is not very difficult considering that there are two metrics provided by Google that we can use to decide the quality of a link:

- The ranking of that page for keywords relevant to our target keywords, which can be checked simply by searching Google for those keywords. This is a manual process that can take a long time, but it works very well

- The organic traffic of the root domain, which you can find using services like SEMrush and Ahrefs. If you are wondering how they get those numbers without having Google Analytics access to every website on the web, they actually estimate them with a good level of accuracy. They have the rankings of billions of keywords in their database, including their search volume, and they know the first 100 websites that rank for each of these keywords. By applying some database queries and some educated guesswork (for example, #1 ranking gets 25% of clicks, #2 gets 12%, etc.), they can predict the monthly organic traffic. For link prospecting purposes this number does not have to be accurate; as long as the domain has 500+ organic visits according to SEMrush, for example, the link is worth pursuing

- The relevancy of the link also helps, not only for search engines but also because relevant links can generate useful traffic that can turn into business. If you are providing link building services you need to worry more about relevancy, as many customers cannot differentiate link building from branding and public relations; they will not be happy to see a link from an irrelevant or poorly designed website.

- A good link (from an SEO perspective) should not carry the "nofollow" attribute, which was created by search engines to signal that no authority or link juice should be passed to the destination URL <a href="https://www.targeturl.com" rel="nofollow">click here</a>
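The traffic estimate described in the list above can be sketched as follows. Only the 25% and 12% click shares and the 500-visit threshold come from the text; the remaining click shares, the default value, the function names and the sample keyword data are assumptions made up for this example, and real tools use much richer models.

```python
# Assumed click-through rates by ranking position. Only the first two values
# (25% and 12%) come from the text; the rest are illustrative guesses.
CTR_BY_POSITION = {1: 0.25, 2: 0.12, 3: 0.08, 4: 0.06, 5: 0.04}
DEFAULT_CTR = 0.01  # assumed share for any lower position

def estimate_monthly_traffic(rankings):
    """rankings: list of (keyword, position, monthly_search_volume)."""
    total = 0.0
    for _keyword, position, volume in rankings:
        total += volume * CTR_BY_POSITION.get(position, DEFAULT_CTR)
    return round(total)

def is_worth_prospecting(rankings, threshold=500):
    """Apply the 500+ estimated monthly organic visits rule of thumb."""
    return estimate_monthly_traffic(rankings) >= threshold

# Hypothetical keyword rankings for a prospect domain.
prospect = [
    ("web hosting", 2, 3000),
    ("cheap web hosting", 1, 1000),
    ("vps hosting", 7, 2000),
]
print(estimate_monthly_traffic(prospect))  # 630
print(is_worth_prospecting(prospect))      # True
```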

Link profile Anatomy:

It is very important to analyze the link profile of any new website you are working on to decide whether you need a link building campaign or not. Tools like SEMrush, Majestic and Ahrefs can help you a lot with that, and they can also help you conduct a competitive link profile analysis to find the link gap with competitors. Analyzing the anchor text is also very helpful for understanding the gap with competitors. In some cases, analyzing the anchor text can reveal link building activities that are against Google's guidelines. If you find these for your client, you need to find out more about the link building campaign that led to those links, and if those links caused significant damage you need to make that clear to your client and lower their expectations about the success of your own link building campaign.

Then you need to understand the types of links your client is getting and how they are acquired; this will help you find opportunities and build your link building strategy.

Natural links:

If you are an active business in North America, you will naturally attract links from business profile pages like Yellow Pages and business directories. Other business organizations that you are registered with, like a chamber of commerce, will link back to you as well. Large businesses have partners that link back to them, they are in the news on a regular basis (for good or bad), which attracts more links, people talk about them on forums and link back to them, and they sponsor lots of events and do digital PR.

This is where you hope to see the majority of the links in a client's link profile. If you are working with a big brand, you will sometimes see millions of links coming in organically without anyone on the client's side trying to build them. For clients like that, you can focus more on on page SEO if the competition analysis shows that there is no big gap in the link profile.

Editorial links:

Editorial links are links that you gain by having great content on your website that gets bloggers and news websites to link back to it. This type of link is very valuable, as in most cases it comes from content-rich pages, and it is normally contextual with a keyword-rich anchor text (depending on the content you have).

Examples of content that can attract editorial links:

- Original research and surveys

- Case studies, guides, white papers and infographics

- Interviews

- Funny or Entertaining original materials

- Tools and calculators

- Blog posts if you are the authority in your space

Please note that in some cases, especially when you do not have your own user base (subscribers or followers), you need to do some content marketing to make interested people aware of this content. Here are a few content marketing activities you can do:

- Blogger outreach (mainly relevant blogs) and influencer outreach in your space. There are many tools and search queries to find them; write a nice email explaining how this content can benefit their users

- Social media marketing: promote your content on your social media accounts and give it a boost using paid social media marketing like Facebook. The level of targeting you can reach with Facebook is unprecedented and can quickly put your content in front of the right people; native advertising with websites like Taboola could also be an option

- Share it on relevant forums where possible, without too much self-promotion. Check the guidelines of each forum, as sometimes sharing third-party content is not allowed even if it is very useful

Manually created - (not editorial but also not paid)

Manually created links are not always against Google's guidelines, but in some cases they are. When you build a dofollow link yourself you control the anchor text, and if you use a keyword-rich anchor text that exactly matches your target keyword you could be violating Google's guidelines.

Popular forms of manually acquired links in 2019:

- Digital press releases

- Guest posts

- Forum signatures

- Embeddable widgets and infographics

With artificial link building you could easily be stepping into the gray or black area and violating Google's guidelines. Two rules can keep you safe from doing that:

- Make sure that manually created links do not make up a large percentage of your link profile

- Avoid using keyword-rich anchor text while you are doing link building

Paid links:

Exchanging links for money is totally against Google's guidelines, yet many people are still doing it, and lists of websites selling links based on DA are still circulating on the web. I do not recommend buying links; however, if you decide to do it in the future, keep the same quality standards that you use for outreach: the website should have organic traffic, add value to users and be relevant to your website.

Machine Generated links (automated):

Those links are generated by software and are totally against Google's guidelines. Such software targets websites that allow user-generated content (mainly forums and blogs) and submits auto-generated content with a link. This was a big thing back in the day, but it has almost zero impact on ranking as of 2019. The chance of seeing a client with this type of link is also very low now, unless a competitor is trying to do negative SEO against them, which also has a very low chance of working.

XRumer, SEnuke and ScrapeBox are popular software packages used for auto-generated links; their main targets are blog comments, forums and social media profiles.

Branding and reputation signals:

Google never admitted using branding signals as a ranking factor but that seems to be hard to believe, let us check what branding signals could be available for Google:

- Mentions, could be the brand name mentions or the domain name mention without a link

- Links with the brand name as anchor text

- The search volume of the brand name

All the signals above are almost foolproof for Google. Even if Google is not using them for now, they are a very good comparison point for competition analysis, especially the brand name search volume. If your competitor's brand name gets 10 times more searches than yours, then they are doing a better job in marketing and will most likely rank better in search engines for different reasons, one of them being that they attract more natural links, as people tend to talk about and link to big brands more.

Social signals as a ranking factor:

I believe Google when they say they do not use social signals like likes and shares in their ranking algorithm, but I know for sure that when content gets good exposure on social media it tends to attract more links. It is actually very common for content marketers to boost their content on social media outlets like Facebook, hoping that users who have the power of linking (bloggers, for example) will find it and link back to it.

Online Reputation and Customer reviews GMB (Google My Business):

When you do any local search like city + dentist, you will see 3 local results above the organic results, which we call the 3 pack. The 3 pack includes business information like location and phone number, and it also includes reviews. Google has not admitted using reviews as a ranking factor, but it is difficult to believe that Google would have no problem ranking a one-star business in the 3 pack or even on the first page organically.

The link building process (mainly for blogger outreach)

Find if your customer needs links or not at least at the beginning, you can do quick link profile comparison using Majestic or Ahrefs to find if there there is a link gap or not. In most cases especially when you are working with big brands link building will not be your low hanging fruit, you will be better off starting with on page optimization and a technical SEO audit.

Explore your likable assets: Building links to the company's home page is not easy and it will not look natural, building linkable assets like cases studies or ifnographics can open the door for editorial links to come and makes your blogger outreach task easier. If you find good to go linkable assets you can start your outreach immediately otherwise you need to build them

Start link prospecting using tools like Pitchbox, BuzzStream or Google (search for keyword + blog), then build your list of blogs to reach out to.

Write a personal, friendly email explaining how the blogger's users will benefit from linking to this content.

Next step: interactive on page optimization and content maintenance
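The link gap check from the first step above can be sketched in a few lines of Python. This is only an illustration: it assumes you have already exported the referring domains for your site and for each competitor from a tool like Majestic or Ahrefs, and the function name `link_gap` and all the domain names are hypothetical.

```python
def link_gap(own_domains, competitor_domains):
    """Return referring domains that link to competitors but not to our site."""
    own = {d.lower() for d in own_domains}
    gap = {}
    for competitor, domains in competitor_domains.items():
        for domain in domains:
            domain = domain.lower()
            if domain not in own:
                # Remember which competitors this domain links to
                gap.setdefault(domain, set()).add(competitor)
    # Simple heuristic: domains linking to the most competitors come first
    return sorted(gap.items(), key=lambda item: (-len(item[1]), item[0]))


# Hypothetical exported data, for illustration only
own = ["blog-a.example", "news-b.example"]
competitors = {
    "competitor-one.example": ["blog-a.example", "blog-c.example", "mag-d.example"],
    "competitor-two.example": ["blog-c.example", "news-b.example"],
}

gap = link_gap(own, competitors)
for domain, linked_competitors in gap:
    print(domain, "links to", sorted(linked_competitors))
```

A long list here suggests there is a link gap worth closing; a short or empty list supports starting with on page optimization instead.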

Tracking and Analytics

Posted on March 17, 2019

"If you cannot measure it, you cannot improve it." Results measurement is vital in any marketing campaign, and luckily when it comes to online marketing there is almost nothing that cannot be measured. There are many metrics that SEOs need to keep an eye on for different reasons:

- They are ranking factors (e.g. website speed)

- They are success signals (e.g. more organic traffic)

- They are alarming signals that need to be investigated (e.g. ranking with Google is declining or GSC sending error notifications)

- They present opportunities (e.g. keywords that rank on the second page can easily make their way to the first page with some optimization)

There are a lot of SEO KPIs to track, so before starting any project you need to sit with the project's stakeholders and discuss which KPIs need to be tracked. Having said that, there are many standard (universal) SEO KPIs that most SEOs track, and I will focus on them in the next section.

Organic traffic tracking metrics:

The metrics below can be tracked using Google Analytics (GA), Google Search Console (GSC) and Google My Business (GMB). Please note that GSC and GMB do not save a long history of data, so you need to make sure you have a solution that keeps the data stored permanently.

- Number of organic landing pages (GA)

- Head (top) keywords ranking (GSC or third party tools)

- Average position/ranking for all keywords (GSC)

- Number of indexed pages (GSC)

- Pages with errors (GSC and GA)

- CTR (click through rate) (GSC)

- Bounce rate (GA)

- Time on site (GA)

- Pages per session (GA)

- Number of keywords ranking on the first page (GSC with some processing)

- Pages that attract zero traffic (GSC + site crawling data)

- Pages and keywords that are losing traffic (GSC)

- Google My Business GMB, impressions, directions, phone calls and clicks (GMB)

It is very important to understand the average values of the metrics above for your industry and set objectives/forecasts for every new project with a clear plan to achieve those forecasts
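The "GSC with some processing" step for counting first-page keywords, and for spotting second-page opportunities, could look like the sketch below. It assumes each row carries a query and its average position (as in a GSC performance export) and that a results page holds roughly 10 organic results; the function name `bucket_keywords` and the sample rows are hypothetical.

```python
def bucket_keywords(rows):
    """Split query rows into first-page and second-page groups by average position."""
    first_page, second_page = [], []
    for row in rows:
        position = row["position"]
        if position <= 10:
            # Assumption: positions 1-10 are on the first page
            first_page.append(row["query"])
        elif position <= 20:
            # Assumption: positions above 10 and up to 20 are on the second page
            second_page.append(row["query"])
    return first_page, second_page


# Hypothetical rows, for illustration only
rows = [
    {"query": "seo audit", "position": 3.2},
    {"query": "keyword research", "position": 12.7},
    {"query": "link building", "position": 9.8},
    {"query": "content mapping", "position": 34.0},
]

first_page, second_page = bucket_keywords(rows)
print(len(first_page), "keywords on the first page")
print("Second-page opportunities:", second_page)
```

Running this on a stored export every month gives you a trend for the first-page count even after GSC has dropped the older data.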

Website performance, UX, CRO and ROI

There are a lot of metrics to create and track that can help with UX and CRO

For UX many of them are mentioned above:

- Bounce rate

- Time on site

- Number of pages per session

- SERP CTR

- Event tracking (e.g. video play duration and links clicking)

For CRO:

- Goal tracking (e.g. tracking form submissions)

- Ecommerce tracking for websites that sell online products

- Connecting CRMs to Google analytics to track sales (e.g. connect SalesForce to Google Analytics)

- Audiences building and tracking

- Phone calls tracking by medium/source

- Clickable phone number event tracking (this is for mobile users that click to call on a phone number)

It is very important to report on the financial success of the SEO campaign. One way to do that is ROI (return on investment), which is simply ((Monthly Earnings - Monthly SEO retainer) / Monthly SEO retainer) × 100; some people use revenue instead of earnings. While doing this you need to consider the customer lifetime value. For a client that offers web hosting, where customers rent servers and keep them almost for life, the monthly earnings or revenue will not reflect the actual value of a customer. If they are selling $200/month dedicated servers with a 30% profit margin, then the earnings from one sale should be 0.3 × 200 × 36 = $2160, taking the average customer lifespan to be 36 months. So if you are paid $1080/month for your SEO services and you are able to generate one sale a month for this client, the ROI of the SEO services will be ((2160 - 1080) / 1080) × 100 = 100%.
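The same arithmetic can be written as a small Python sketch, which makes it easy to swap in a different margin, lifespan or retainer. The function names are hypothetical and the numbers are the ones from the web hosting example above.

```python
def lifetime_earnings(monthly_price, profit_margin, lifespan_months):
    """Earnings from one customer over their whole lifespan."""
    return monthly_price * profit_margin * lifespan_months


def roi_percent(earnings, seo_retainer):
    """ROI = ((earnings - retainer) / retainer) * 100."""
    return (earnings - seo_retainer) / seo_retainer * 100


# $200/month server, 30% profit margin, 36 month average lifespan
sale_value = lifetime_earnings(200, 0.30, 36)

# One sale generated in a month where the SEO retainer is $1080
roi = roi_percent(sale_value, 1080)

print("Lifetime earnings per sale:", round(sale_value, 2))
print("ROI %:", round(roi, 2))
```

Using the monthly earnings of $60 instead of the lifetime value would make the same campaign look like a heavy loss, which is why the lifetime value matters for subscription businesses.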

Another way to justify ROI, if you do not have access to the client's financial data, is going by the click value. If you have a personal injury lawyer client where a click using Google Search Ads can cost $100 or more for some keywords, being able to drive 50 clicks/month from relevant keywords (worth about 50 × $100 = $5000 in ad spend) can justify an SEO spend of $2000 or more.

Competition analysis:

Picking the right tools for competition analysis is key to coming up with any meaningful insights. In order to keep it apples to apples you cannot use GSC to measure your own traffic and then use SEMrush to measure competitors' traffic; just use the same tool for everyone.

We are very lucky to live in an era where tools like SEMrush and Ahrefs are available to provide very useful link graph and traffic data for almost any website on the web, the key metrics to track while doing competition analysis are:

- Referring domains

- Inbound links

- Organic traffic

- Ranking for highly searched keywords

- Number of keywords on the first page

- Number of indexed pages

All of them are available in SEMrush, with the exception of the number of indexed pages, which you can track using the Google site:domain.com modifier.

The most popular tools used by SEOs for tracking key metrics:

- GMB (Google My Business)

- GSC (Google Search Console)

- GA (Google Analytics)

- GTM (Google Tag Manager)

- SEMrush

- Ahrefs

- Google Data Studio

Offering tracking services:

With tools like GA and GTM, tracking is becoming a service in its own right that SEOs can provide to their clients. Here are a few areas where clients need help:

- GA optimization: Google recommends creating multiple views in each property, and each view needs its own filters and goals

- Mapping events and goals: After a long time using the same view and many parties adding their own filters, goals, audiences and customization there will be a need for someone to come and map all of this and do some clean up

- Many clients end up with 100s of tags and triggers in their GTM, which becomes very difficult to navigate and understand, so it needs some mapping and clean up

- Self submission forms and complex events tracking need GTM and GA experts to implement them

- Ecommerce tracking is normally difficult for many webmasters to implement on their own unless it is natively supported by the shopping cart software (e.g. Shopify)

- Creating GA and GDS custom dashboards

Gaining advanced knowledge in GA, GSC and GTM is vital for your SEO career. Make sure you check the available certificates here that can help you gain and validate the tracking knowledge you need to support your SEO career.

Winning Content and Professional Design

Posted on March 17, 2019

Content creation might not be the specialty of SEOs (link building used to be able to get mediocre content to rank), but the updates made by Google in recent years have made content creation the backbone of SEO. Nowadays gaining high rankings by relying on traditional SEO tactics like on-page and off-page SEO without winning content is not possible, or at least it will be facing a headwind. The opposite also applies: winning content will be a tailwind and requires less SEO effort.

What is winning content?

When you are trying to rank for a specific keyword, winning content is content that answers the search query quickly with a good user experience. There are two ways to find winning content:

- Put yourself in the user's shoes and ask yourself whether this content gives you the best answer for what you searched for

- Google the keyword you are creating content for and visit the top 5 or 10 results to see what Google is favoring

Content has different formats that can go beyond text to images, videos and applications. Let us take the keyword "mortgage amortization schedule". Winning content for this keyword will be a page that has:

- A web app (mortgage amortization calculator using JavaScript)

- A chart showing the payment schedule (image format)

- Advice about the best amortization rate and payment schedule (text content)
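To give a feel for what the calculator part of such a page computes, here is a minimal Python sketch of an amortization schedule (the web app itself would be written in JavaScript, as noted above). It assumes a fixed-rate loan with monthly payments and monthly compounding, which real mortgage products may not follow exactly; the function name and the loan figures are hypothetical.

```python
def amortization_schedule(principal, annual_rate, years):
    """Return (monthly_payment, rows) for a fixed-rate loan with monthly payments.

    Each row is (month, interest_paid, principal_paid, remaining_balance).
    """
    n = years * 12            # total number of monthly payments
    r = annual_rate / 12      # monthly interest rate (assumes monthly compounding)
    if r == 0:
        payment = principal / n
    else:
        # Standard annuity payment formula
        payment = principal * r / (1 - (1 + r) ** -n)

    balance = principal
    rows = []
    for month in range(1, n + 1):
        interest = balance * r
        principal_paid = payment - interest
        balance -= principal_paid
        # Clamp tiny negative balances caused by floating point rounding
        rows.append((month, interest, principal_paid, max(balance, 0.0)))
    return payment, rows


# Hypothetical loan: $300,000 at 5% over 25 years
payment, rows = amortization_schedule(300_000, 0.05, 25)
print("Monthly payment:", round(payment, 2))
for month, interest, principal_paid, balance in rows[:3]:
    print(month, round(interest, 2), round(principal_paid, 2), round(balance, 2))
```

The rows returned here are the data behind both the calculator output and the payment schedule chart in the list above.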

E-A-T (Expertise Authority Trustworthiness) and content

This term is taken from Google's quality rater guidelines, where they ask their human raters to evaluate the website's expertise in the topic, authority and trustworthiness. It is easy to conclude that achieving a good E-A-T score is impossible without creating good content.

Please note that E-A-T is not a ranking factor, but historically Google always starts a process using humans and then builds an algorithm to replicate it. A good example of that is their Penguin update, which was launched in 2012: they started by sending manual actions using human reviewers before making that process machine driven with the Penguin update.

The process of building winning content:

Before creating any page you need to decide the purpose of that page, and finding one or two target keywords is a good start. Keywords are questions asked by users to the search engines, and the search engines' main interest is putting the best answer at the top (that is how they keep users happy and coming back to use them again). If your content is not the best, or among the best, at answering the user's question then you are doomed to fail eventually; even if it is working fine now, it will not be after the next algorithm update.

Content creation considerations:

- How much text do you need to answer the user's question, long text format or short text format?

- Do you need media content like videos, audio, images, infographics and apps?

- The page that contains the content has to be part of a professionally designed website that provides a good user experience

- The content must be well formatted and easy to read

- Add new images and be sure to optimize those images with alt tags and other image optimization tactics.

- Do you need to include case studies and data?

- Do you need to optimize for enhanced SERPs where possible?

Why is winning content a tailwind?

- Winning content doesn't mean that your page will rank at the top of the SERPs automatically, but it gives SEOs the peace of mind that their efforts will have a better chance of working, as it removes a major ranking impediment (the lack of winning content)

- Winning content also has a better chance of being shared on social media websites and eventually attracting more links

- Winning content will get a website noticed as an authority in the space, which eventually will improve branding and reputation

Professional Design:

Hosting great content on a poorly designed website with a bad user experience will cause multiple issues:

- Fewer people will share poorly designed websites

- It reduces the trustworthiness of the content

- It will negatively affect user experience, which will be reflected in bounce rate, time on site and number of pages per session

Conclusion:

Before taking on any SEO project make sure the website is professionally designed (looks good, is easy to use and has a nice logo). Also make sure it has winning content for the target keywords; otherwise you will be facing a headwind with your SEO efforts, with a good chance of missing the SEO campaign's objectives.

If you feel good about the website and the content, let us move to the next step: keyword research and content mapping

SEO Job Interview Questions You Need to Be Ready to Answer

Posted on February 14, 2019

As you are reading this post I hope you have written a good SEO resume and got your first interview. I am going to go through the basics of an interview before going to the technical SEO questions.

- Expect more than one person to be in that interview (a technical person and an HR person); some companies do a preliminary interview (could be over the phone) to ask some questions and do a personality check before bringing you to an onsite interview

- Research well what the company (your future employer) does, and see also if you can find their HR person and their marketing manager on LinkedIn and read more about them

- Remember the old saying "be on time, be prepared". Try to arrive early, possibly an hour early, so you can stop by a nearby coffee shop for a while, then go to their office and arrive 10 minutes earlier than your interview time

- Dress professionally

- Greet respectfully with a smile and mind cultural and personality differences. I live in Toronto, a multicultural city where you never know the background of the person interviewing you. To be on the safe side just stand up for them when they approach you and do not initiate a handshake, just wait for them

- Remind yourself every minute during the interview that there are interpersonal skills and personality checks going on all the time; failing the personality check may lead you to lose that opportunity no matter how technically good you are

- Breathe well, do not talk with your hands too much, speak clearly and slowly

In the second part of this post I will take you through the most popular technical SEO questions that you may face in an interview (I will leave it to you to research the answers, if you have any questions leave them in the comment section):

- How deep is your keyword research experience? What is intent keyword research? What is your favorite keyword research tool?

- Crawling, rendering and indexing, what are they and who does them?

- What is your favorite website crawling technology?

- How important is a sitemap?

- GSC (Google Search Console) experience? What are the most important sections? What do you do if you find bad links pointing back to your website in GSC? How long does GSC save data for a website?

- Have you used Majestic or Ahrefs before? How to find the best links your competitors have using those tools?

- A client moves from page A to page B (the URL was changed). What is the best practice to avoid organic traffic loss?

- 301 redirect vs 302 vs meta refresh vs JavaScript redirect vs canonical: can you explain the difference?